Current Research

Under construction 🙂; in the meantime, see the Publications page.

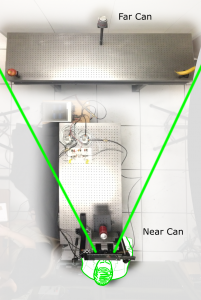

Desktop 3D Telepresence

Egocentric Body Pose Tracking

Eye Tracking with 3D Pupil Localization

Holographic Near-Eye Display

Novel View Rendering using Neural Networks

Past Research

Dynamic Focus Augmented Reality Display

Some of the fundamental limitations of existing near-eye displays (NEDs) for augmented reality are limited field of view (FOV), low angular resolution, and fixed accommodative state. We tackle the problem of providing wide FOV and accommodative cues together in the context of see-through and varifocal systems. We propose a new hybrid hardware design for NEDs that uses see-through deformable membrane mirrors. Our work promises to address the vergence-accommodation conflict caused by the lack of accommodative cues.
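The vergence-accommodation conflict is commonly expressed in diopters (reciprocal meters) as the gap between the distance at which the eyes converge and the distance at which the display optics focus. The sketch below is illustrative only: the function names and the example distances are assumptions, not values from this project.

```python
def diopters(distance_m: float) -> float:
    """Convert a viewing distance in meters to optical power in diopters."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def vac_mismatch(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (hypothetical helper)."""
    return abs(diopters(vergence_distance_m) - diopters(focal_distance_m))


# A fixed-focus display focused at 2 m showing a virtual object at 0.5 m.
fixed_focus = vac_mismatch(0.5, 2.0)
# A varifocal display that moves its focal plane to the object's depth.
varifocal = vac_mismatch(0.5, 0.5)
```

In this toy setting, the varifocal case drives the mismatch to zero because the focal plane follows the depth of the virtual object.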

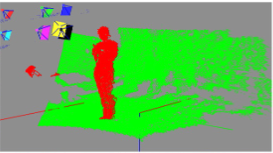

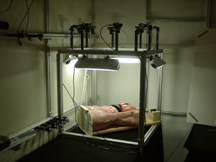

Immersive Learning Experiences from 3D Reconstruction of Dynamic Scenes

In this project, we investigate 3D reconstruction of room-sized dynamic scenes (i.e., containing moving humans and objects) with a quality and fidelity significantly higher than currently possible. These improvements will enable immersive learning of rare and important situations through post-event, annotated, guided virtual reality experiences. Examples of such situations include emergency medical procedures. We expect to have a significant impact on society by enabling such immersive experiences for activities currently requiring live physical presence.

Low Latency Display

We are developing a low-latency tracking and display system suitable for ultra-low-latency, optical see-through, augmented reality head-mounted displays. Our display runs at a very high frame rate and performs in-display corrections on supplied imagery to account for the latest tracking information and the latency in a standard rendering system.
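As a rough illustration of why such corrections matter, the sketch below estimates the angular error that accumulates during rendering latency and the horizontal image shift that would compensate for a small change in head yaw. It is a simplified 2D approximation under assumed conventions (positive yaw turns the head to the right, positive shift moves the image to the right, focal length given in pixels); the function names are hypothetical and this is not the correction used in our display.

```python
import math


def angular_error_deg(head_rate_deg_per_s: float, latency_s: float) -> float:
    """Angle the head turns while a frame is being rendered and scanned out."""
    return head_rate_deg_per_s * latency_s


def correction_shift_px(render_yaw_deg: float,
                        latest_yaw_deg: float,
                        focal_px: float) -> float:
    """Horizontal shift (pixels) that re-aligns a rendered frame.

    The frame was rendered for `render_yaw_deg`; the tracker now reports
    `latest_yaw_deg`. World-fixed content must move opposite to the head
    rotation, hence the negative sign.
    """
    delta = math.radians(latest_yaw_deg - render_yaw_deg)
    return -focal_px * math.tan(delta)


# Head turning at 60 deg/s with 50 ms of end-to-end latency.
error = angular_error_deg(60.0, 0.050)
# Shift needed if the head has yawed 1 degree since the frame was rendered.
shift = correction_shift_px(0.0, 1.0, focal_px=1000.0)
```

The faster the display can apply such a correction after the latest tracker reading, the smaller the residual misregistration between real and virtual content.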

Pinlight Display

We present a novel design for an optical see-through augmented reality display that offers a wide field of view and supports a compact form factor approaching ordinary eyeglasses. We demonstrate feasibility through software simulations and a real-time prototype display that offers a 110° diagonal field of view in the form factor of large glasses and discuss remaining challenges to constructing a practical display.

Office of the Future

This work is based on a unified application of computer vision and computer graphics in a system that combines and builds upon the notions of panoramic image display, tiled display systems, image-based modeling, and immersive environments. Our goals include a better everyday graphical display environment and 3D tele-immersion capabilities that allow distant people to feel as though they are together in a shared office space.

3D Telepresence for Medical Consultation

The project will develop and test 3D telepresence technologies that are permanent, portable and handheld in remote medical consultations involving an advising healthcare provider and a distant advisee. The project will focus on barriers to 3D telepresence, including real time acquisition and novel view generation, network congestion and variability, and tracking and displays for producing accurate 3D depth cues and motion parallax.

Wall-to-Wall Telepresence

The system enabled visualization of large 3D models on a multi-screen 2D display on a single wall for telepresence applications. We experimented with large datasets, such as reconstructed models of the Roman Colosseum, and dense datasets, such as highly detailed models of an indoor room, and found the visualization to be very convincing for a single user. We experimented with a number of ways to achieve a satisfactory visual experience for multiple users, but none of our methods provided the realism and experience required of modern-day telepresence systems.

Multi-view Teleconferencing

We developed a two-site teleconferencing system that supports multiple people at each site while maintaining gaze awareness among all participants and providing each local participant with a unique view of the remote site.

Avatar

We developed a one-to-many teleconferencing system in which a user inhabits an animatronic shader lamps avatar at a remote site. The system allows the user to maintain gaze awareness with the other people at the remote site.

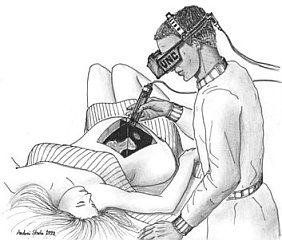

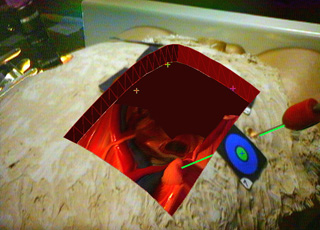

Ultrasound/Medical Augmented Reality Research

Our research group is working to develop and operate a system that allows a physician to see directly inside a patient, using augmented reality (AR). AR combines computer graphics with images of the real world. This project uses ultrasound echography imaging, laparoscopic range imaging, a video see-through head-mounted display (HMD), and a high-performance graphics computer to create live images that combine computer-generated imagery with the live video image of a patient.

Pixel-Planes & PixelFlow

Since 1980, we have been exploring computer architectures for 3D graphics that, compared to today’s systems, offer dramatically higher performance with wide flexibility for a broad range of applications. A major continuing motivation has been to provide useful systems for our researchers whose work in medical visualization, molecular modeling, and architectural design exploration requires graphics power far beyond that available in today’s commercial systems. We are now focusing on image-based rendering, with the goal of designing and building a high-performance graphics engine that uses images as the principal rendering primitive. Browse our Web pages to learn more about our research, the history of the group, publications, etc.

Telecollaboration

Telecollaboration is unique among the Center’s research areas and driving applications in that it truly is a single multi-site, multi-disciplinary project. The goal has been to develop a distributed collaborative design and prototyping environment in which researchers at geographically distributed sites can work together in real time on common projects. The Center’s telecollaboration research embraces both desktop and immersive VR environments in a multi-faceted approach that includes:

3D Laparoscopic Visualization

We present the design and a prototype implementation of a three-dimensional visualization system to assist with laparoscopic surgical procedures. The system uses 3D visualization, depth extraction from laparoscopic images, and six degree-of-freedom head and laparoscope tracking to display a merged real and synthetic image in the surgeon’s video-see-through head-mounted display. We also introduce a custom design for this display. A digital light projector, a camera, and a conventional laparoscope create a prototype 3D laparoscope that can extract depth and video imagery.

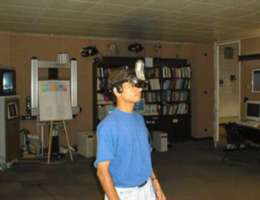

Wide-Area Tracking

Head-mounted displays (HMDs) and head-tracked stereoscopic displays provide the user with the impression of being immersed in a simulated three-dimensional environment. To achieve this effect, the computer must constantly receive precise six-dimensional (6D) information about the position and orientation (the pose) of the user’s head, and must rapidly adjust the displayed image(s) to reflect the changing head pose. This pose information comes from a tracking system. We are working on wide-area systems for the 6D tracking of heads, limbs, and hand-held devices.
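To make the role of the 6D pose concrete, the following minimal sketch shows how a renderer might use a tracked pose to express a world-space point in head coordinates. The `Pose` type, the quaternion convention (unit quaternion `(w, x, y, z)` giving the head's orientation in the world frame), and the function names are assumptions chosen for illustration; they do not describe the interface of any particular tracker.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    position: tuple     # head position in world coordinates (x, y, z)
    orientation: tuple  # unit quaternion (w, x, y, z), head-to-world rotation


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q, v):
    """Rotate vector v by unit quaternion q."""
    w, qv = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(qv, v))
    u = cross(qv, t)
    return tuple(v[i] + w * t[i] + u[i] for i in range(3))


def world_to_head(pose, point):
    """Express a world-space point in the head's coordinate frame."""
    offset = tuple(point[i] - pose.position[i] for i in range(3))
    w, x, y, z = pose.orientation
    inverse = (w, -x, -y, -z)  # the conjugate inverts a unit quaternion
    return rotate(inverse, offset)


# Head at (1, 0, 0), turned 90 degrees about the world z axis.
half = math.radians(90.0) / 2.0
head = Pose(position=(1.0, 0.0, 0.0),
            orientation=(math.cos(half), 0.0, 0.0, math.sin(half)))
local = world_to_head(head, (1.0, 2.0, 0.0))
```

Here the point lies two units along the direction the head's x axis now points, so its head-frame coordinates come out as approximately (2, 0, 0). Any error in the tracked position or orientation propagates directly into this transform, which is why the displayed image depends on precise pose data.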

Image-Based Rendering

In the pursuit of photo-realism in conventional polygon-based computer graphics, models have become so complex that most of the polygons are smaller than one pixel in the final image. At the same time, graphics hardware systems at the very high end are becoming capable of rendering, at interactive rates, nearly as many triangles per frame as there are pixels on the screen. Formerly, when models were simple and the triangle primitives were large, the ability to specify large, connected regions with only three points was a considerable efficiency in storage and computation. Now that models contain nearly as many primitives as pixels in the final image, we should rethink the use of geometric primitives to describe complex environments.

We are investigating an alternative approach that represents complex 3D environments with sets of images. These images include information describing the depth of each pixel along with the color and other properties. We have developed algorithms for processing these depth-enhanced images to produce new images from viewpoints that were not included in the original image set. Thus, using a finite set of source images, we can produce new images from arbitrary viewpoints.
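The idea of producing a new view from a depth-enhanced image can be sketched as a per-pixel reprojection: back-project each source pixel to 3D using its depth, then project it into the new camera. The example below is a minimal sketch under simplifying assumptions (an ideal pinhole camera, and a new camera that is translated but not rotated relative to the source); the function names and parameter values are hypothetical, and this is not a description of our algorithms.

```python
def unproject(u, v, depth, f, cx, cy):
    """Back-project pixel (u, v) with known depth to a camera-space 3D point."""
    x = (u - cx) * depth / f
    y = (v - cy) * depth / f
    return (x, y, depth)


def project(point, f, cx, cy):
    """Project a camera-space 3D point to pixel coordinates."""
    x, y, z = point
    return (f * x / z + cx, f * y / z + cy)


def warp_pixel(u, v, depth, f, cx, cy, translation):
    """Find where a source pixel lands in a camera moved by `translation`.

    `translation` is the new camera's position in source-camera coordinates;
    both cameras are assumed to share orientation and intrinsics.
    """
    x, y, z = unproject(u, v, depth, f, cx, cy)
    tx, ty, tz = translation
    return project((x - tx, y - ty, z - tz), f, cx, cy)


# Assumed intrinsics: 500 px focal length, principal point at (320, 240).
f, cx, cy = 500.0, 320.0, 240.0
move_right = (0.1, 0.0, 0.0)  # new camera 10 cm to the right

near = warp_pixel(320.0, 240.0, 2.0, f, cx, cy, move_right)
far = warp_pixel(320.0, 240.0, 4.0, f, cx, cy, move_right)
```

The nearer pixel shifts twice as far as the distant one, which is the motion parallax that per-pixel depth makes possible. A complete system would also have to resolve visibility and fill holes where the new view exposes surfaces that no source image captured.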

Wide Area Visuals with Projectors

This project aims to develop a robust multi-projector display and rendering system that is portable and can be rapidly set up and deployed in a variety of geometrically complex display environments. Our goals include seamless geometric and photometric image projection, and continuous self-calibration using one or more pan-tilt-zoom cameras.

Basic Calibration Method; Rendering System; Continuous Calibration using Imperceptible Structured Light
